Multi-modal Maritime Perception

SeePerSea -- Multi-modal Perception Dataset of In-water Objects for Autonomous Surface Vehicles

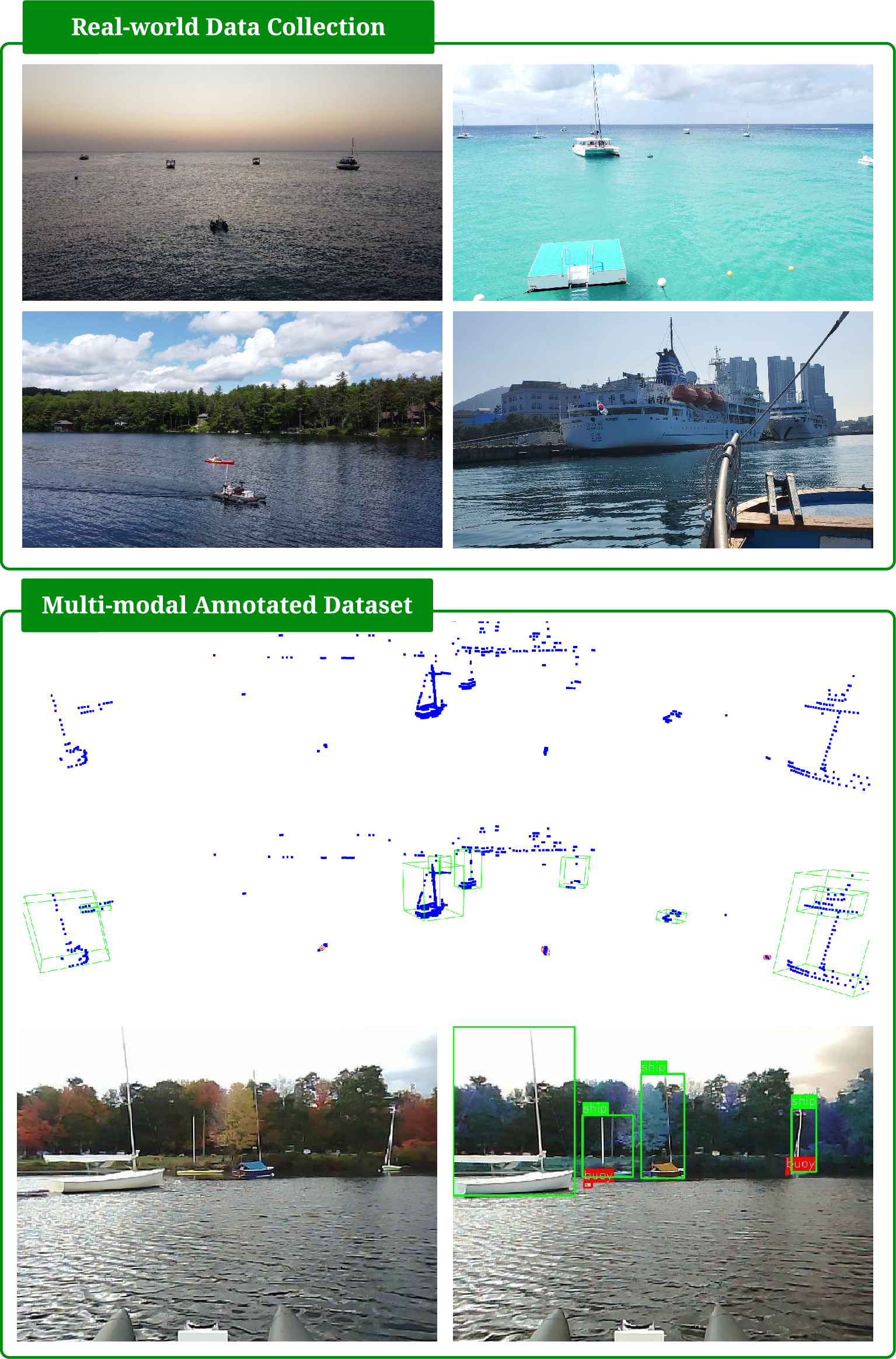

This paper introduces the first publicly accessible labeled multi-modal perception dataset for autonomous maritime navigation, focusing on in-water obstacles within the aquatic environment to enhance situational awareness for Autonomous Surface Vehicles (ASVs). The dataset, collected over 4 years and consisting of diverse objects encountered under varying environmental conditions, aims to bridge the research gap in autonomous surface vehicles by providing a multi-modal, annotated, and ego-centric perception dataset for object detection and classification. We also show the applicability of the proposed dataset by training deep learning-based open-source perception algorithms that have shown success. We expect that our dataset will contribute to the development of marine autonomy pipelines and marine (field) robotics.

First multi-modal dataset

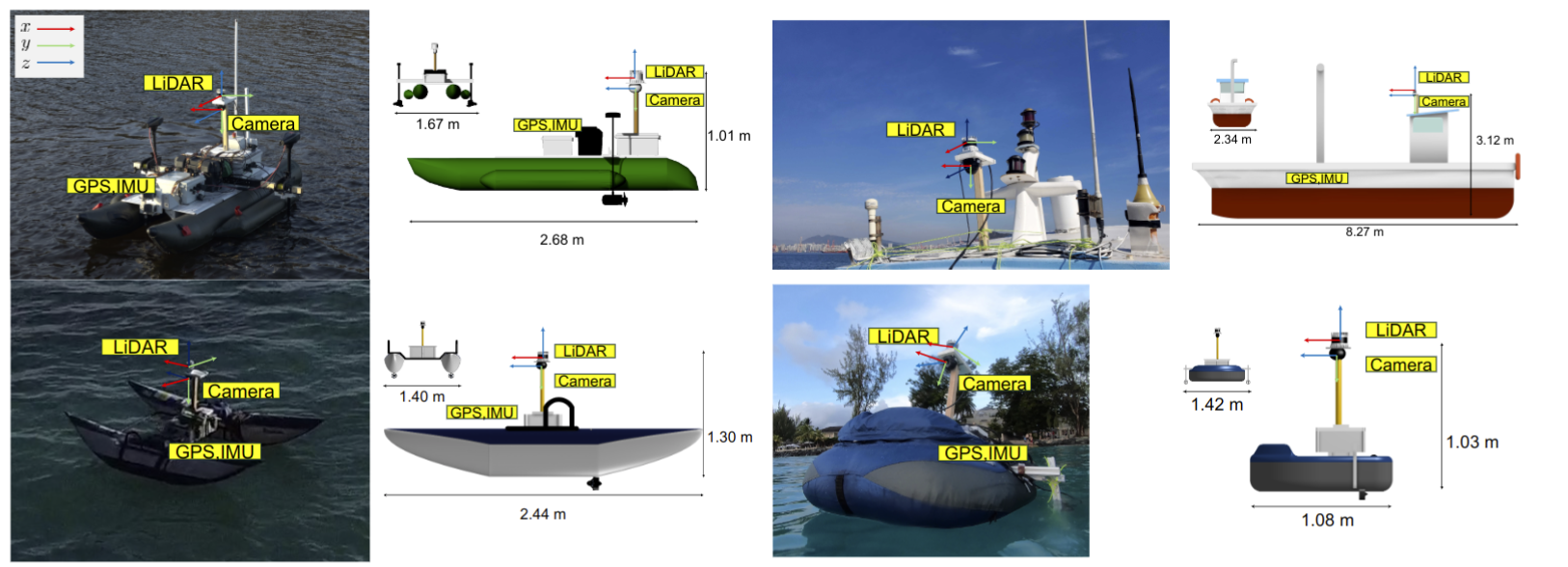

Our platforms for data collection

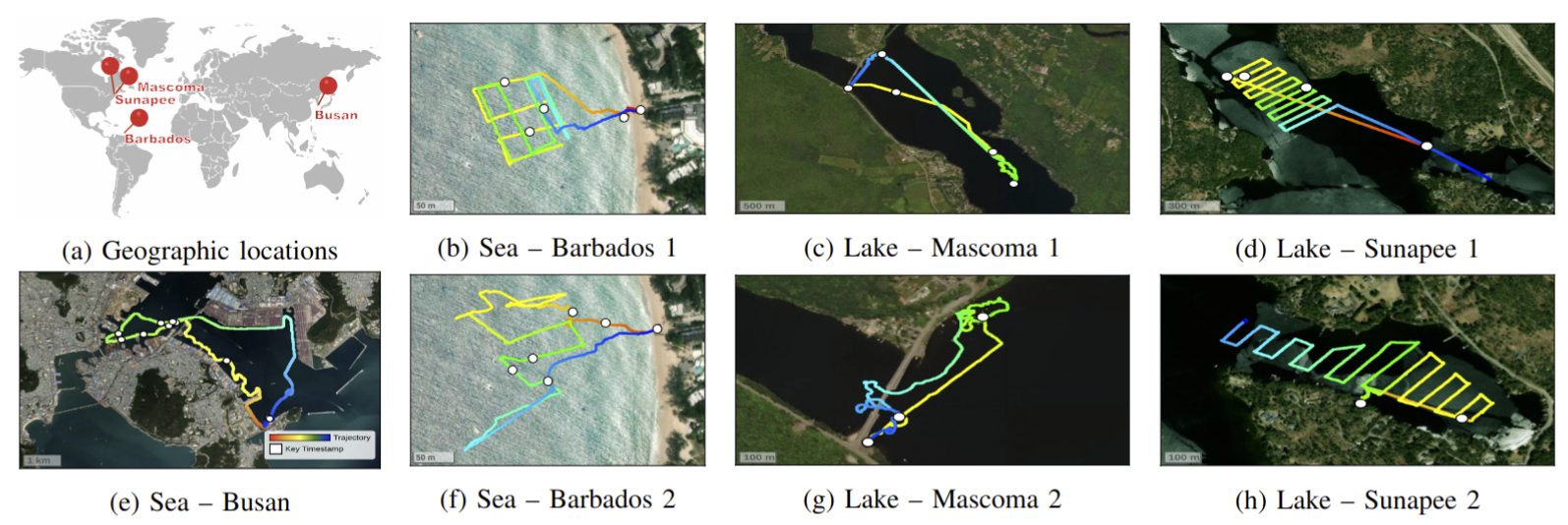

Geographic locations for our dataset

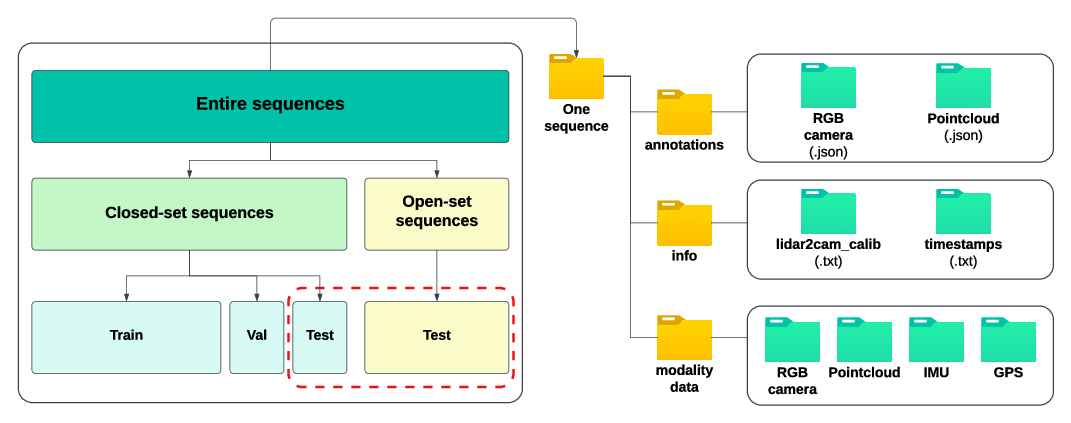

Folder structure of our dataset